Overview

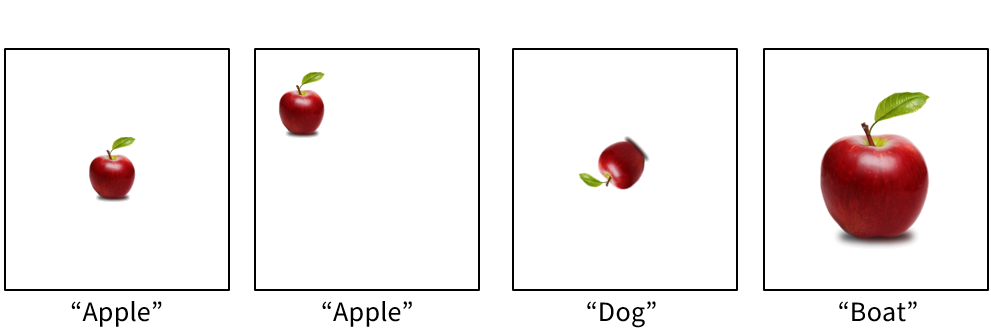

There are many applications where we want a neural network to have certain symmetries, as illustrated in the figure above. Most applications come with natural symmetries: for example, rotation-translation invariance for a medical diagnosis application that detects tumors in X-ray images, or time invariance for a weather forecasting system.

The desired symmetry might not be an actual invariance: the output may not be expected to remain the same but instead to transform in a manner similar to the input. For example, in an image enhancement application the output image is expected to rotate and translate along with the input image. Some authors use invariance to denote both cases. We will use the term equivariance (as in: transforms with) to refer to both, and reserve invariance for the special case where the output should stay the same.
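The distinction between equivariance and invariance can be checked numerically in the one case where rotating a pixel grid is exact, namely a rotation by [math]90^\circ[/math]. The following is a minimal sketch in plain Python; the function names (`rotate90`, `brighten`, `total_intensity`, `horizontal_difference`) are hypothetical and chosen only for illustration.

```python
def rotate90(image):
    """Rotate a 2D grid (list of rows) by 90 degrees counter-clockwise."""
    # Transpose the grid, then reverse the order of the rows.
    return [list(row) for row in zip(*image)][::-1]


def brighten(image):
    """Pointwise operation: acts on each pixel independently of its position."""
    return [[2 * p + 1 for p in row] for row in image]


def total_intensity(image):
    """Global summary: a single number that ignores pixel positions."""
    return sum(sum(row) for row in image)


def horizontal_difference(image):
    """Difference of horizontally adjacent pixels: singles out one direction."""
    return [[row[j + 1] - row[j] for j in range(len(row) - 1)] for row in image]


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]

# Equivariance: rotating then brightening equals brightening then rotating.
is_equivariant = brighten(rotate90(image)) == rotate90(brighten(image))

# Invariance: the output does not change at all when the input is rotated.
is_invariant = total_intensity(rotate90(image)) == total_intensity(image)

# Neither: the horizontal difference does not commute with the rotation.
difference_commutes = (
    horizontal_difference(rotate90(image)) == rotate90(horizontal_difference(image))
)

print(is_equivariant, is_invariant, difference_commutes)
```

The first two checks hold and the third fails: a pointwise operation transforms along with its input, a global sum ignores the transformation entirely, and an operation that prefers one direction has neither property.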

Expressing many of these symmetries on discrete domains is awkward. Indeed, rotations and translations of an image are not even well defined unless the translation is aligned with the pixel grid or the rotation is in [math]90^\circ[/math] increments. So instead we will work in the continuous setting and only discretize when it becomes time to implement our ideas. Instead of representing images as elements of [math]\mathbb{R}^{H \times W}[/math], we represent them as elements of [math]C_c^\infty(\mathbb{R}^2)[/math], i.e. smooth functions with compact support.

Our eventual practical goal for this chapter is building a type of CNN that is not only translation equivariant but also rotation equivariant. But, being mathematicians, we want to be general and develop a theory that allows for other transformations as well. This brings us to the theory of Lie groups, which are essentially continuous transformation groups, something we will make precise in this chapter. Lie group theory plays an important role in many disparate fields of study, such as geometric image analysis, computer vision, particle physics, financial mathematics and robotics. In the next sections we will build up a general equivariance framework based on Lie groups. The payoff of this theoretical work will be a general recipe for building a neural network that is equivariant with respect to an arbitrary transformation group: a so-called Group Equivariant CNN [1][2], or G-CNN for short.
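To illustrate why the continuous viewpoint is convenient, the sketch below treats an image as a function on [math]\mathbb{R}^2[/math] and rotates it by an arbitrary angle before any sampling takes place. It assumes the common convention that a transformation [math]g[/math] acts on a function by [math](g \cdot f)(x) = f(g^{-1} x)[/math], which this section does not state explicitly. The names `bump`, `rotate` and `sample`, as well as the chosen center and radius, are hypothetical.

```python
import math


def bump(x, y, cx=0.5, cy=0.0, radius=0.4):
    """A smooth function with compact support: a bump centered at (cx, cy)."""
    r2 = ((x - cx) ** 2 + (y - cy) ** 2) / radius ** 2
    if r2 >= 1.0:
        return 0.0  # identically zero outside the disc of the given radius
    return math.exp(-1.0 / (1.0 - r2))


def rotate(f, theta):
    """Return the function (g . f)(x) = f(g^{-1} x) for a rotation g by theta."""
    c, s = math.cos(theta), math.sin(theta)

    def rotated(x, y):
        # Apply the inverse rotation (by -theta) to the point, then evaluate f.
        return f(c * x + s * y, -s * x + c * y)

    return rotated


def sample(f, n=5, extent=1.0):
    """Discretize only at the end: evaluate f on an n-by-n grid over [-extent, extent]^2."""
    coords = [-extent + 2.0 * extent * i / (n - 1) for i in range(n)]
    return [[f(x, y) for x in coords] for y in coords]


theta = math.radians(37.0)       # any angle works, not only multiples of 90 degrees
rotated_bump = rotate(bump, theta)

# The peak of the bump moves from (0.5, 0) to the rotated point.
peak_x, peak_y = 0.5 * math.cos(theta), 0.5 * math.sin(theta)
print(rotated_bump(peak_x, peak_y), bump(0.5, 0.0))

grid = sample(rotated_bump, n=5)  # a 5-by-5 array of samples of the rotated image
```

No interpolation is needed for the rotation itself, since it acts on the function rather than on a pixel array. Approximation only enters in `sample`, which is where a practical implementation would have to choose a grid.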

General references

Smets, Bart M. N. (2024). "Mathematics of Neural Networks". arXiv:2403.04807 [cs.LG].